Introduction

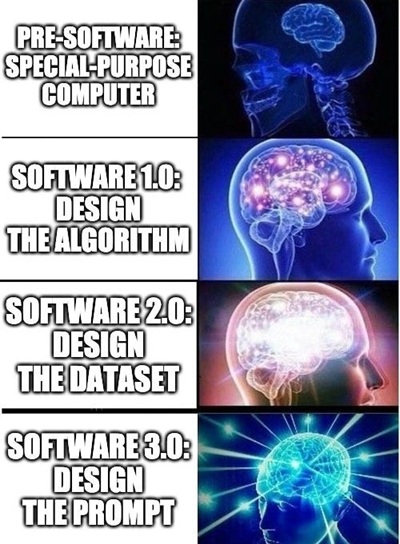

Former Tesla AI director Andrej Karpathy delivers a masterful analysis of how artificial intelligence is fundamentally reshaping software development. Speaking to an audience of students about to enter the industry, Karpathy argues that we’re witnessing the most significant transformation in software since its inception 70 years ago. His central thesis: we’ve evolved from Software 1.0 (traditional code) through Software 2.0 (neural networks) to Software 3.0 (large language models), where programming now happens in natural English rather than formal programming languages.

All about Software 3.0

The concept of Software 3.0 represents a paradigm shift in how we create and interact with software: programming becomes “prompt engineering”, the design of a well-formed natural language prompt that queries a model for the desired output. The concept has gained significant attention, particularly after Andrej Karpathy’s presentation at Y Combinator’s AI Startup School in June 2025.

The Evolution: From 1.0 to 3.0

Software 1.0 is traditional programming: a developer writes every line of code needed to achieve a desired behavior, in languages like Python, C++, and Java.

Software 2.0, coined by Andrej Karpathy in 2017, refers to the machine learning model paradigm for software where the code usually consists of a dataset that defines the desirable behavior and a neural net architecture that gives the rough skeleton of the code, but with many details (the weights) to be filled in.

Software 3.0 takes this further – programming an LLM with natural language prompts where a desirable program is obtained by querying a large AI model that can accomplish a broad range of tasks.
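As a rough illustration of the three paradigms, the sketch below solves one small task, sentiment classification, three ways. The word lists, the four-example dataset, the function names, and the prompt wording are all assumptions made for illustration; none of them come from Karpathy's talk. The Software 3.0 version only builds the prompt text and does not call any particular model API.

```python
# Software 1.0: the behavior is spelled out by hand, line by line.
POSITIVE = {"great", "love", "good"}      # hypothetical word lists
NEGATIVE = {"bad", "terrible", "hate"}

def classify_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score >= 0 else "negative"

# Software 2.0: a dataset defines the desired behavior and training
# fills in the weights (here, a tiny bag-of-words perceptron).
DATASET = [
    ("i love this", 1),
    ("great product", 1),
    ("i hate this", -1),
    ("terrible product", -1),
]

def train_v2(dataset, epochs: int = 10) -> dict:
    weights: dict = {}
    for _ in range(epochs):
        for text, label in dataset:
            words = text.split()
            score = sum(weights.get(w, 0.0) for w in words)
            if score * label <= 0:            # misclassified: nudge the weights
                for w in words:
                    weights[w] = weights.get(w, 0.0) + label
    return weights

def classify_v2(weights: dict, text: str) -> str:
    score = sum(weights.get(w, 0.0) for w in text.lower().split())
    return "positive" if score >= 0 else "negative"

# Software 3.0: the "program" is a natural language prompt sent to an LLM.
def build_prompt_v3(text: str) -> str:
    return (
        "Classify the sentiment of the review as positive or negative.\n"
        f"Review: {text}\n"
        "Sentiment:"
    )

if __name__ == "__main__":
    print(classify_v1("I love this great phone"))
    print(classify_v2(train_v2(DATASET), "i hate this product"))
    print(build_prompt_v3("The battery died after a day."))
```

In the first version the programmer edits rules, in the second the programmer edits data, and in the third the programmer edits English.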

Key Characteristics

The defining feature of Software 3.0 is that it understands prompts in natural language—English, in many cases—and crafts responses and actions in a context that mimics human comprehension. As Karpathy noted in his 2025 presentation, “Perhaps GitHub code is no longer just code, but a new category of code that mixes code and English is expanding”.

This paradigm represents the democratization of programming. The barrier to entry for software creation drops precipitously when you can instruct a machine in plain English, drastically expanding who can participate in building digital solutions.

Current Applications and Tools

Software 3.0 is already being implemented through various AI-powered development tools:

- Code generation platforms like GitHub Copilot, Cursor, and Replit Agent

- “Vibe coding” – a term popularized by Karpathy for the practice of writing code, making web pages, or creating apps by simply telling an AI program what you want and letting it create the product for you

- No-code/low-code platforms that leverage LLMs for application development

Notably, Y Combinator reported that 25% of startup companies in its Winter 2025 batch had codebases that were 95% AI-generated, demonstrating the rapid adoption of this approach.

The Infrastructure Analogy

Karpathy draws compelling parallels between LLMs and fundamental infrastructure. He quoted Andrew Ng: “AI is the new electricity.” This quote underscores how LLMs are becoming a fundamental resource, much like electricity, and transforming how we interact with technology.

LLMs have characteristics of:

- Utilities: LLMs are now provided as services where you pay based on the amount of usage (e.g., cost per million tokens), similar to how electricity is billed based on consumption

- Fabrication plants: The cost of building and training these models is huge

- Operating systems: LLMs are becoming complex software ecosystems, the “core” of modern applications
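The utility-style billing described above comes down to simple arithmetic. The sketch below assumes separate per-million-token prices for input and output; the function name and the prices in the example are hypothetical and do not reflect any provider's actual rates.

```python
def usage_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Metered cost in dollars: tokens used, scaled by a per-million price."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_million
    output_cost = (output_tokens / 1_000_000) * output_price_per_million
    return input_cost + output_cost

if __name__ == "__main__":
    # Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
    print(usage_cost(2_000_000, 500_000, 3.0, 15.0))  # 6.0 + 7.5 = 13.5
```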

Current Limitations and Challenges

Despite its promise, Software 3.0 faces several challenges:

- “Jagged Intelligence”: State-of-the-art LLMs can perform extremely impressive tasks (e.g., solving complex math problems) while simultaneously struggling with some very dumb problems

- “Anterograde Amnesia”: LLMs are a bit like a coworker with anterograde amnesia – they don’t consolidate or build long-running knowledge or expertise once training is over, and all they have is short-term memory (the context window)

- Quality concerns: A key part of the definition of vibe coding is that the user accepts code without full understanding, which raises questions about code quality and maintainability.
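The “short-term memory” limitation can be made concrete with a small sketch of a fixed context budget: only the most recent messages that fit are visible to the model, and anything older is simply gone. This is a simplified illustration, not how any specific product manages context; the function name is hypothetical, and counting whitespace-separated words is a crude stand-in for a real tokenizer.

```python
def fit_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):      # walk from newest to oldest
        cost = len(message.split())         # assumption: 1 word ~ 1 token
        if used + cost > max_tokens:
            break                           # older messages fall out of "memory"
        kept.append(message)
        used += cost
    return list(reversed(kept))             # restore chronological order

if __name__ == "__main__":
    history = [
        "my name is Dana",
        "please review this function",
        "now add unit tests",
    ]
    # With a budget of 8 "tokens", the first message no longer fits.
    print(fit_context(history, max_tokens=8))
```

Once the first message drops out, nothing in the model itself remembers the user's name, which is the amnesia the analogy describes.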

Video about Software 3.0

Summary of the Talk:

The Evolution of Software Paradigms

Karpathy presents a fascinating framework dividing software development into three distinct eras:

- Software 1.0: Traditional computer code written by humans

- Software 2.0: Neural network weights optimized through data and algorithms (exemplified by his work at Tesla’s Autopilot)

- Software 3.0: Large Language Models programmable through natural language prompts

The speaker emphasizes how each paradigm “eats through” the previous one’s stack, just as neural networks gradually replaced traditional code in Tesla’s autonomous driving system. This progression represents more than incremental improvement—it’s a fundamental shift in how we interact with and program computers.

LLMs as the New Operating System

One of Karpathy’s most compelling analogies positions LLMs as operating systems rather than simple utilities. He draws parallels between:

- Utility aspects: Metered access, uptime demands, and the concept of “intelligence brownouts” when models go down

- Fab characteristics: High capital expenditure requirements and centralized development

- OS similarities: Complex software ecosystems with closed-source providers (OpenAI, Anthropic) and open-source alternatives (Llama ecosystem)

He argues we’re currently in the “1960s era” of this new computing paradigm, where LLM compute remains expensive and centralized, forcing time-sharing arrangements similar to early mainframe computers.

The Psychology and Limitations of AI

Karpathy provides a nuanced view of LLMs as “stochastic simulations of people” with both superhuman capabilities and significant cognitive deficits:

Strengths:

- Encyclopedic knowledge and memory (likened to the autistic savant in “Rain Man”)

- Ability to process vast amounts of information

Limitations:

- Hallucination and fabrication of information

- “Jagged intelligence” causing superhuman performance in some areas while making basic errors

- Anterograde amnesia – inability to learn and consolidate knowledge over time

- Security vulnerabilities including prompt injection and data leakage

Partial Autonomy: The Path Forward

Rather than advocating for fully autonomous AI agents, Karpathy champions “partial autonomy” applications. Using Cursor (coding assistant) and Perplexity (search tool) as examples, he outlines key features of successful LLM applications:

- Context management by the LLM

- Multi-model orchestration behind the scenes

- Application-specific GUI for human oversight

- Autonomy slider allowing users to control the level of AI involvement
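One way to picture the autonomy slider is as a threshold on how large a change the AI may make on its own. The sketch below is one possible interpretation, not a description of how Cursor or Perplexity work internally; the `Scope` levels, the `plan_action` function, and the two action names are all hypothetical.

```python
from enum import IntEnum

class Scope(IntEnum):
    """Hypothetical sizes of change, from smallest to largest."""
    COMPLETION = 1   # finish the current line
    SELECTION = 2    # rewrite a highlighted region
    FILE = 3         # rewrite a whole file
    REPO = 4         # change anything in the repository

def plan_action(requested: Scope, slider: Scope) -> str:
    """Apply changes within the delegated scope; otherwise only propose them."""
    if requested <= slider:
        return "apply"
    return "propose_for_review"

if __name__ == "__main__":
    # The user has set the slider to FILE.
    print(plan_action(Scope.SELECTION, slider=Scope.FILE))  # within delegated scope
    print(plan_action(Scope.REPO, slider=Scope.FILE))       # exceeds delegated scope
```

Even for applied changes, the application-specific GUI would still be where the human inspects what the model did; the slider only decides how much work is delegated at once.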

The speaker draws from his autonomous driving experience at Tesla, noting that even after 12 years of development, full self-driving remains elusive, suggesting AI agents will require similar long-term development and human oversight.

“Vibe Coding” and Democratized Programming

Karpathy introduces the concept of “vibe coding” – programming through natural language that makes everyone a potential programmer. He shares personal examples of building iOS apps and web applications despite lacking formal knowledge of Swift or web development frameworks. This democratization represents an unprecedented shift where programming expertise is no longer gated by years of formal study.

However, he notes that while the coding itself became accessible, deployment, authentication, payments, and other “real-world” infrastructure remain complex and human-intensive.

Building for AI Agents

The final section explores preparing digital infrastructure for AI consumption:

- LLM-friendly documentation in markdown format rather than visual interfaces

- Direct AI communication through files like “llm.txt” (similar to robots.txt)

- API-first approaches replacing “click here” instructions with programmatic commands

- Tools for content ingestion that transform human interfaces into AI-readable formats
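As a small illustration of LLM-friendly documentation, the sketch below renders a plain markdown index that an agent could read instead of navigating a visual site. The layout (a title, a one-line summary, and a list of links) is loosely modeled on the kind of file described above and should be treated as an assumption, as should the function name and the example.com URLs.

```python
def render_llm_txt(name: str, summary: str, docs: list[tuple[str, str]]) -> str:
    """Build a markdown index of documentation pages for LLM consumption."""
    lines = [f"# {name}", "", f"> {summary}", "", "## Docs", ""]
    for title, url in docs:
        lines.append(f"- [{title}]({url})")   # one markdown link per page
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    print(render_llm_txt(
        "Example API",                                   # hypothetical project
        "REST API for managing invoices.",
        [
            ("Quickstart", "https://example.com/quickstart.md"),
            ("Authentication", "https://example.com/auth.md"),
        ],
    ))
```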

Companies like Vercel and Stripe are pioneering documentation specifically designed for LLM consumption, replacing visual instructions with programmatic alternatives.

How Software 3.0 May Impact Southeast Asia

Software 3.0 is having a profound and transformative impact on Southeast Asia, positioning the region as a major global AI hub while creating both unprecedented opportunities and significant challenges.

Economic Impact and Growth Projections

The economic potential is staggering. By 2030, AI adoption could improve the region’s total gross domestic product (GDP) by between 13 and 18 percent, a value nearing US$1 trillion. Separately, Kearney’s analysis predicted that AI would contribute 10 to 18 percent of the region’s GDP by 2030, with more advanced economies such as Singapore, Malaysia, and Indonesia expected to use AI to further their modernization.

Southeast Asia’s digital economy is particularly well-positioned for this transformation. As one of the world’s fastest-growing internet markets, Southeast Asia’s digital economy is projected to nearly triple by 2030, expanding from $300 billion to close to $1 trillion.

Rapid AI Adoption and Developer Ecosystem Changes

The region is demonstrating remarkable speed in AI adoption. Most organizations can move from an initial idea to production within six months. Impressively, 7 out of 10 organizations in Southeast Asia report a positive return on investment (ROI) attributable to GenAI workflows within 12 months of implementation.

For developers specifically, around 40% now use AI-powered tools like GitHub Copilot to accelerate coding, streamline debugging, and enhance code quality. The developer workforce is also growing significantly: Asia leads globally in total developers, with over 6.5 million.

Investment Surge and Infrastructure Development

Foreign investment is pouring into the region’s AI infrastructure. In the first half of 2024 alone, more than US$30 billion was committed to building AI-ready data centres across Singapore, Thailand, and Malaysia. Major tech companies are making substantial commitments: Amazon has pledged $9 billion to expand infrastructure in Singapore, and Microsoft has invested billions in AI data center infrastructure across the region, including Indonesia, Malaysia, and Thailand.

Southeast Asia is attracting record levels of foreign direct investment (FDI), even as global FDI declines. As Courtney Fingar highlights, the region secured $235 billion in FDI in 2024—outpacing China.

Country-Specific Developments

Singapore leads the region with 25 governance initiatives, including the National AI Strategy and the Model AI Governance Framework, and is positioning itself as an AI governance hub.

Indonesia shows the highest AI adoption rate in Southeast Asia at 24.6 percent, followed by Thailand (17.1 percent), Singapore (9.9 percent), and Malaysia (8.1 percent).

Malaysia is scaling its semiconductor ambitions with its National Semiconductor Strategy and has ambitions to be a top 20 global AI player.

Local Language Model Development

The region is actively developing localized AI models to address linguistic diversity. SEA-LION, a product of AI Singapore, which is itself a “multi-party effort between various economic agencies and academia”, is but a first step in a series of continuing efforts to build more sophisticated models in the coming years. AI Singapore stated that SEA-LION’s training data comprised 13 percent Southeast Asian–language content, 64 percent English-language content, and the remainder Chinese-language content and code.

Additionally, there are regional initiatives like the Pan-SEA AI Developer Challenge 2025, which aims to empower AI developers across Southeast Asia to build LLM-powered solutions that matter in their local context, with the chance to scale across the region.

Challenges and Concerns

Despite the opportunities, the region faces significant challenges:

Talent Shortage: Southeast Asia also faces challenges in AI development, including talent shortages, digital infrastructure gaps, and the need for robust regulatory frameworks.

Employment Concerns: There is also a growing unease about the potential impact on employment, especially for low-skilled workers. Many workers are worried about their jobs being automated, especially in countries like Indonesia and the Philippines.

Governance Gaps: ASEAN has not been able to devise a regional governance framework to address relevant existing and future challenges, and none of its member states has issued binding regulations on AI use and development.

Skills Development and Education

The region is investing heavily in AI literacy and skills development. Initiatives like the recent $5 million grant from Google.org to the ASEAN Foundation for AI literacy development can play a role in this shared agenda. The focus is on integrating AI literacy into national skills frameworks to build a pipeline of data scientists, machine learning engineers, and AI professionals.

Geopolitical Positioning

Southeast Asia is becoming strategically important in the global AI landscape. Chinese companies have released a slew of foundational AI models that demonstrate the country’s research progress, and according to a 2023 report, China made the highest investments in Southeast Asian AI startups between 2011 and 2021. Meanwhile, the US risks falling behind. China joins Southeast Asian nations in the Regional Comprehensive Economic Partnership (RCEP), and US allies like Japan and Australia join many Southeast Asian nations in the Comprehensive and Progressive Agreement for Trans-Pacific Partnership (CPTPP), while the US is on the outside looking in.

Future Implications

Karpathy emphasizes that “we all have the potential to make these decisions and actually move fluidly between these paradigms” – suggesting that effective developers will need to understand when to use Software 1.0, 2.0, or 3.0 approaches.

In Karpathy’s framing, Software 3.0 is eating Software 1.0 and 2.0, fundamentally changing how we think about software development: from writing code to orchestrating AI systems through natural language interaction.

This paradigm shift is happening right now, with a lot of 1.0 and 2.0 code being replaced by 3.0 code, making it one of the most significant changes in software development since the advent of programming itself.

Benefit for Southeast Asia

Southeast Asia is uniquely positioned to benefit from Software 3.0. By fostering a thriving AI ecosystem, the region is poised not only to unlock significant economic growth but also to surpass developed economies in GDP growth. Its young population, which may benefit significantly from building AI skills, and its rapid digital adoption make it an ideal testing ground for AI-powered applications.

Bottom Line: Software 3.0 is transforming Southeast Asia into a major AI powerhouse, with the potential to add nearly $1 trillion to regional GDP by 2030. While challenges around talent, governance, and employment displacement remain, the region’s strategic investments, rapid adoption rates, and young tech-savvy population position it to become a leading force in the global AI economy. For developers and businesses in the region, this represents a once-in-a-generation opportunity to participate in and shape the future of software development.

Conclusion and Key Takeaways

We are experiencing a once-in-a-generation shift in software development that creates enormous opportunities for new developers entering the field.

Essential Takeaways:

- Multi-paradigm fluency is crucial – Future developers must be comfortable with traditional coding, neural networks, and natural language programming

- Focus on partial autonomy over full automation – Build “Iron Man suits” (augmentation tools) rather than “Iron Man robots” (fully autonomous agents)

- Human-AI collaboration is key – Success depends on optimizing the generation-verification loop between humans and AI

- Infrastructure must evolve – Software and documentation need to become AI-readable while maintaining human usability

- Democratization creates opportunity – Natural language programming opens software development to unprecedented numbers of people

- Patient development approach – Like autonomous driving, AI agents will require years of careful development with humans in the loop

Final Insight: Karpathy positions this as “the decade of agents” rather than “the year of agents,” emphasizing the need for sustained, thoughtful development rather than rushed deployment of autonomous systems.

Related References

- Tesla Autopilot development – Real-world example of Software 2.0 replacing traditional code

- 3Blue1Brown’s Manim library – Example of LLM-accessible documentation

- Model Context Protocol (Anthropic) – Standard for AI-software communication

- Vercel and Stripe documentation – Early examples of AI-optimized technical documentation