Introduction:

ChatGPT was released in November 2022 and reached 100 million monthly active users in just two months, which at the time was reported as the fastest growth of any consumer app. In this video, we explore the technical workings of ChatGPT: its architecture and the underlying principles that make it work. Check out the video below.

Key Points:

- The Power of Large Language Models:

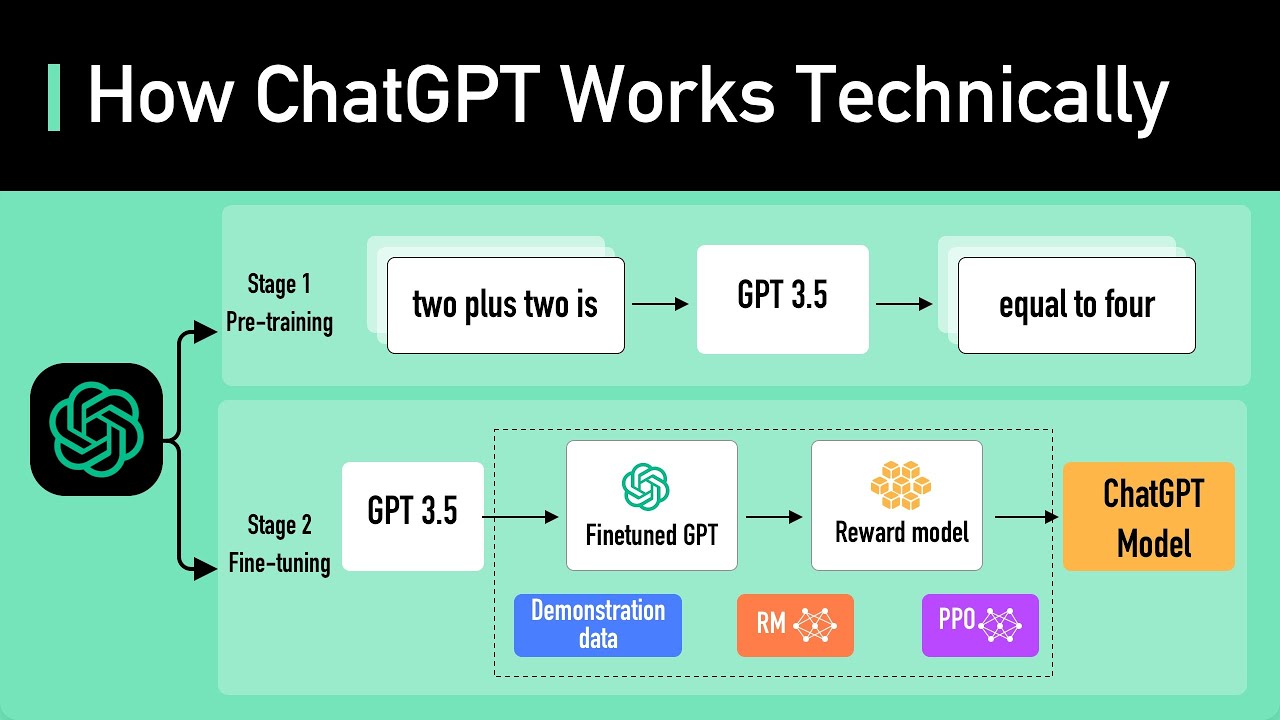

At the core of ChatGPT lies a Large Language Model (LLM), specifically GPT-3.5, which can interpret and generate human language. LLMs are neural networks trained on very large amounts of text, from which they learn statistical patterns and relationships between words. With 175 billion parameters spread across 96 layers (the figures published for GPT-3, on which GPT-3.5 is based), it was among the largest deep learning models at the time of its release.

- Training and Tokenization:

GPT-3.5 was trained on a dataset of about 500 billion tokens, corresponding to hundreds of billions of words from the internet. Tokenization splits text into tokens (whole words or pieces of words) and maps each one to a numerical ID, which lets the model process text efficiently. The model was trained to predict the next token given a sequence of input tokens, which is how it generates text that is grammatically correct and semantically similar to its training data.

- Fine-Tuning for Safety and Chatbot Capabilities:

To make ChatGPT safer and better at conversation, the model is further fine-tuned with Reinforcement Learning from Human Feedback (RLHF). Much like refining the skills of an already capable chef, RLHF involves collecting feedback from real people on the model's responses, training a reward model on their preferences, and using Proximal Policy Optimization (PPO) to iteratively improve the model against that reward. This fine-tuning enables ChatGPT to generate more accurate and tailored responses.

- Context Awareness and Prompt Engineering:

To stay context-aware within a chat, ChatGPT uses conversational prompt injection: the entire past conversation is fed to the model as input along with the new prompt. Primary prompt engineering adds instructions, invisible to the user, before and after the user's prompt to guide the model's conversational tone. Together, these techniques let ChatGPT respond appropriately within the given context.

- Moderation and Safety Measures:

Considering the potential for generating unsafe or harmful content, ChatGPT employs a moderation API that warns or blocks certain types of content. Both the user’s input and the generated response are subject to moderation, ensuring a safer user experience and preventing the dissemination of harmful information.
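
As a rough illustration of how the last two points fit together, here is a minimal Python sketch of a single chat turn. It is not how ChatGPT is actually implemented: the hidden instructions (`SYSTEM_PREFIX`, `SYSTEM_SUFFIX`), the blocklist-based `is_flagged` check, and the `fake_model` stub are all hypothetical stand-ins chosen for this example. The real moderation step is a separate classifier service, and the real instructions are not public.

```python
from dataclasses import dataclass, field

# Hypothetical hidden instructions; the wording ChatGPT really uses is not public.
SYSTEM_PREFIX = "You are a helpful assistant. Answer in a conversational tone."
SYSTEM_SUFFIX = "Assistant:"

# Stand-in for a moderation service: a tiny blocklist, for illustration only.
BLOCKED_TERMS = {"build a bomb", "steal a password"}


def is_flagged(text):
    """Return True if the text contains any blocked phrase."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def fake_model(prompt):
    """Placeholder for the LLM call; reports how many prompt lines it received."""
    return f"(reply based on {len(prompt.splitlines())} lines of context)"


@dataclass
class Chat:
    # Each history entry is a (speaker, text) pair.
    history: list = field(default_factory=list)

    def build_prompt(self, user_message):
        """Wrap the past conversation and the new message in the hidden instructions."""
        lines = [SYSTEM_PREFIX]
        for speaker, text in self.history:
            lines.append(f"{speaker}: {text}")
        lines.append(f"User: {user_message}")
        lines.append(SYSTEM_SUFFIX)
        return "\n".join(lines)

    def send(self, user_message, model=fake_model):
        """Moderate the input, call the model, moderate the output, then store the turn."""
        if is_flagged(user_message):
            return "[input blocked by moderation]"
        reply = model(self.build_prompt(user_message))
        if is_flagged(reply):
            return "[response blocked by moderation]"
        self.history.append(("User", user_message))
        self.history.append(("Assistant", reply))
        return reply
```

Calling `Chat().send("Hello")` twice on the same object shows the prompt growing from three lines to five, because the first exchange is replayed as context on the second turn. Blocked turns are not added to the history in this sketch, which is a design choice of the example, not a documented ChatGPT behavior.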

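To make the tokenization and next-token prediction point from earlier more concrete, the toy Python sketch below maps words to integer IDs and then predicts the most likely next token from simple follow-up counts. This is only an analogy under simplifying assumptions: GPT-3.5 uses sub-word tokens and a deep neural network, not whitespace splitting and a count table, and the corpus and function names here are made up for the example.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace (real models use sub-word tokens)."""
    return text.lower().split()


def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def train_bigram(ids):
    """Count, for every token ID, which IDs followed it in the training sequence."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(ids, ids[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, token_id):
    """Return the most frequent follower of token_id, or None if it has no followers."""
    followers = counts.get(token_id)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat slept"
tokens = tokenize(corpus)
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]          # text as a sequence of numerical IDs
counts = train_bigram(ids)
id_to_token = {i: t for t, i in vocab.items()}

print(ids)                                                # [0, 1, 2, 3, 0, 4, 5, 0, 1, 6]
print(id_to_token[predict_next(counts, vocab["the"])])    # cat
```

In this corpus "the" is followed by "cat" twice and "mat" once, so "cat" is the prediction. An LLM does the same kind of thing at a vastly larger scale, conditioning on the whole preceding sequence instead of only the previous token.
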
Conclusion:

ChatGPT has revolutionized the field of natural language processing with its powerful LLM architecture and sophisticated techniques such as RLHF and prompt engineering. As we continue to explore the boundaries of AI, ChatGPT stands as a testament to the remarkable progress achieved in the domain of conversational AI. By constantly evolving and integrating safety measures, ChatGPT offers an exciting glimpse into the future of human-computer interaction, reshaping the way we communicate and engage with AI-powered systems.
