Introduction

In March 2025, Google DeepMind announced two artificial intelligence models built for robotics: Gemini Robotics and Gemini Robotics-ER. Built on Gemini 2.0, the models are designed to give robots three capabilities: perceiving and interpreting their surroundings accurately, understanding and responding to natural language commands in real time, and carrying out complex physical tasks precisely. In doing so, they aim to close the long-standing gap between AI that performs well in the digital realm and robots that must act in the physical world. If that approach holds up outside the lab, it could reshape automation across a wide range of industries, from manufacturing and healthcare to logistics and domestic assistance.

Key Aspects of Gemini Robotics

Vision Language Action (VLA) Model

Gemini Robotics is a vision-language-action (VLA) model: it observes its environment through camera feeds, interprets natural language instructions, and outputs actions that control a robot. Traditional industrial robots must be programmed extensively for each specific task. Gemini Robotics, by contrast, can handle objects, instructions, and scenarios it was not explicitly trained on. According to Google DeepMind, it more than doubled the performance of previous state-of-the-art models on a generalization benchmark.

Adaptability and Interactivity

The model continuously monitors its environment and can adapt on the fly to changes, such as objects being moved or unexpected obstacles. This real-time replanning ability makes it particularly valuable in dynamic, unpredictable environments. Additionally, its advanced language understanding allows it to process casual commands in everyday language, including complex instructions with qualifiers or exceptions.
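To make the idea of real-time replanning concrete, here is a toy, closed-loop perceive-plan-act sketch in Python. It is not Gemini Robotics code, and Google DeepMind has not described the model's internals at this level. The `World` class, the `plan` and `run` functions, and the string action names are all hypothetical stand-ins. The sketch illustrates only the control structure: re-observe the scene before every action, and discard the current plan whenever the scene has changed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class World:
    """Hypothetical stand-in for what the robot's cameras report about the scene."""
    object_positions: dict[str, tuple[int, int]] = field(default_factory=dict)


def plan(obj: str, target: tuple[int, int], world: World) -> list[str]:
    """Hypothetical planner: reach the object where it is *now*, grasp it, carry it, release."""
    x, y = world.object_positions[obj]
    tx, ty = target
    return [f"move_to {x},{y}", f"grasp {obj}", f"move_to {tx},{ty}", "release"]


def run(obj: str, target: tuple[int, int], world: World,
        disturbances: dict[int, tuple[int, int]]) -> list[str]:
    """Closed-loop execution: observe before every step and replan if the scene changed.

    `disturbances` maps a step index to a new position for `obj`, simulating
    someone moving the object while the robot is working.
    """
    executed: list[str] = []
    current_plan = plan(obj, target, world)
    last_seen = dict(world.object_positions)  # snapshot the current plan was based on
    step = 0
    while current_plan:
        # Simulated external change to the scene at this step, if any.
        if step in disturbances:
            world.object_positions[obj] = disturbances[step]
        # Re-observe: if the scene differs from what the plan assumed, replan from scratch.
        if world.object_positions != last_seen:
            current_plan = plan(obj, target, world)
            last_seen = dict(world.object_positions)
        executed.append(current_plan.pop(0))  # "execute" the next action
        step += 1
    return executed


if __name__ == "__main__":
    world = World({"cup": (1, 1)})
    # Someone moves the cup to (2, 3) just before step 1.
    for action in run("cup", (5, 5), world, disturbances={1: (2, 3)}):
        print(action)
```

Running it prints `move_to 1,1`, `move_to 2,3`, `grasp cup`, `move_to 5,5`, `release`. After the first step the cup is moved, so the loop notices the change and heads to the cup's new location instead of grasping at the old one. In a real VLA model, the hand-written `plan` would be replaced by actions the model computes from camera images and the instruction.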

Dexterity and Fine Motor Skills

One of the most impressive aspects of Gemini Robotics is its ability to perform tasks requiring fine motor skills. Google DeepMind demonstrated the model controlling robotic arms to fold origami, pack items in bags, and handle fragile objects with precision – tasks that traditionally challenge robotic systems.

Embodied Reasoning (ER)

Gemini Robotics-ER, its sister model, specializes in spatial understanding and embodied reasoning. It excels at working out where objects are and how they are arranged in physical space, planning suitable grasps, and computing safe trajectories for robotic movements. In end-to-end testing, Gemini Robotics-ER achieved success rates two to three times higher than baseline Gemini 2.0 on tasks spanning perception, state estimation, spatial understanding, and planning.

Industry Partnerships and Implementation

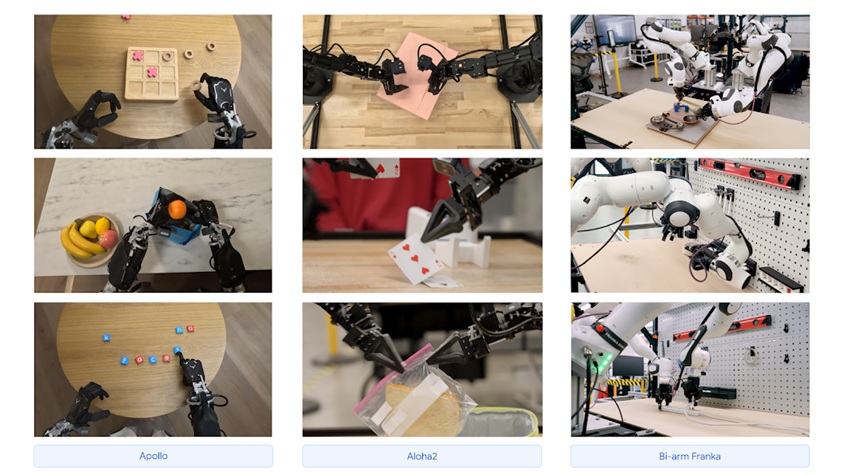

Google has partnered with several robotics companies to test and deploy these models. The most significant is Apptronik, which builds the Apollo humanoid robot and in which Google invested as part of a $350 million funding round. Other collaborators include Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools, which suggests these models may soon power many types of robots across multiple environments.

Safety and Ethics Framework

Google has introduced the “ASIMOV dataset,” named for Isaac Asimov and his Three Laws of Robotics, to measure and improve semantic safety in embodied AI. It has also developed a framework for generating “robot constitutions,” which are sets of natural language rules that steer robots away from unsafe or unethical tasks. This work is overseen by Google’s Responsibility and Safety Council, which works with external experts to address the societal implications of advanced embodied AI.

Conclusion

5 Key Takeaways:

- Gemini Robotics and Gemini Robotics-ER represent a significant leap in robot intelligence, enabling natural language interaction and adaptive physical action.

- The models generalize to new objects, instructions, and scenarios without task-specific training; on Google DeepMind's generalization benchmark, Gemini Robotics more than doubled the performance of previous state-of-the-art models.

- Fine motor skills and dexterity have improved markedly, allowing robots to perform delicate tasks such as folding origami and packing items into bags, which were previously beyond their capabilities.

- Google is partnering with robotics companies such as Apptronik and Boston Dynamics to bring these models to a range of robot platforms.

- Safety and ethical considerations are being addressed through the ASIMOV dataset and “robot constitutions” that guide AI behavior in line with human values.