Introduction:

Welcome to this special presentation on the intricacies of designing and constructing efficient artificial intelligence (AI) data centers. This video dives deep into server hardware configurations and the optimization of AI accelerator cards for large-scale model training. As we explore this topic, we’ll address the challenge of ensuring efficient data transfer and communication within servers and clusters, essential for AI data centers running thousands of servers simultaneously.

High-Performance Interconnects for GPUs: InfiniBand, RoCE, iWARP, and NVLink:

These technologies all address the challenge of connecting multiple Graphics Processing Units (GPUs), whether inside a single server or across a cluster, for faster processing and data exchange. Here’s a breakdown of each:

1. InfiniBand (IB):

- Type: High-performance networking fabric specifically designed for data centers and HPC environments.

- Focus: Low latency and high bandwidth for communication between various components in a system, including CPUs, GPUs, and storage.

- Implementation: Uses dedicated hardware (adapters) and switches to create a separate network for high-performance data transfers.

Benefits:

- Extremely low latency, ideal for real-time data exchange between GPUs.

- High bandwidth, suitable for large data transfers required in HPC and AI workloads.

- Scalable architecture, supporting connections for a large number of devices.

Drawbacks:

- Requires dedicated hardware, making it more expensive than other options.

- Increased complexity due to separate network management.

2. RDMA over Converged Ethernet (RoCE):

- Type: Protocol that leverages standard Ethernet networks with special hardware to enable Remote Direct Memory Access (RDMA).

- Focus: Enables efficient data transfers between applications on different systems without involving the CPU for every step.

- Implementation: Requires network cards (NICs) with RoCE support to offload data movement tasks.

Benefits:

- Utilizes existing Ethernet infrastructure, potentially reducing cost compared to InfiniBand.

- Offers lower latency and higher bandwidth compared to standard TCP/IP networking.

Drawbacks:

- Performance can be limited by the underlying Ethernet network infrastructure.

- Requires compatible hardware support (RoCE-enabled NICs).

3. iWARP (Internet Wide Area RDMA Protocol):

- Type: Like RoCE, an RDMA protocol that runs on Ethernet networks, but layered on top of TCP/IP rather than directly on Ethernet or UDP.

- Focus: Standardized by the IETF, iWARP predates RoCE and brings RDMA to ordinary routed TCP/IP networks.

- Implementation: Similar to RoCE, requiring iWARP-enabled network cards.

Current Status:

- iWARP has largely been superseded by RoCE, which has gained wider industry adoption.

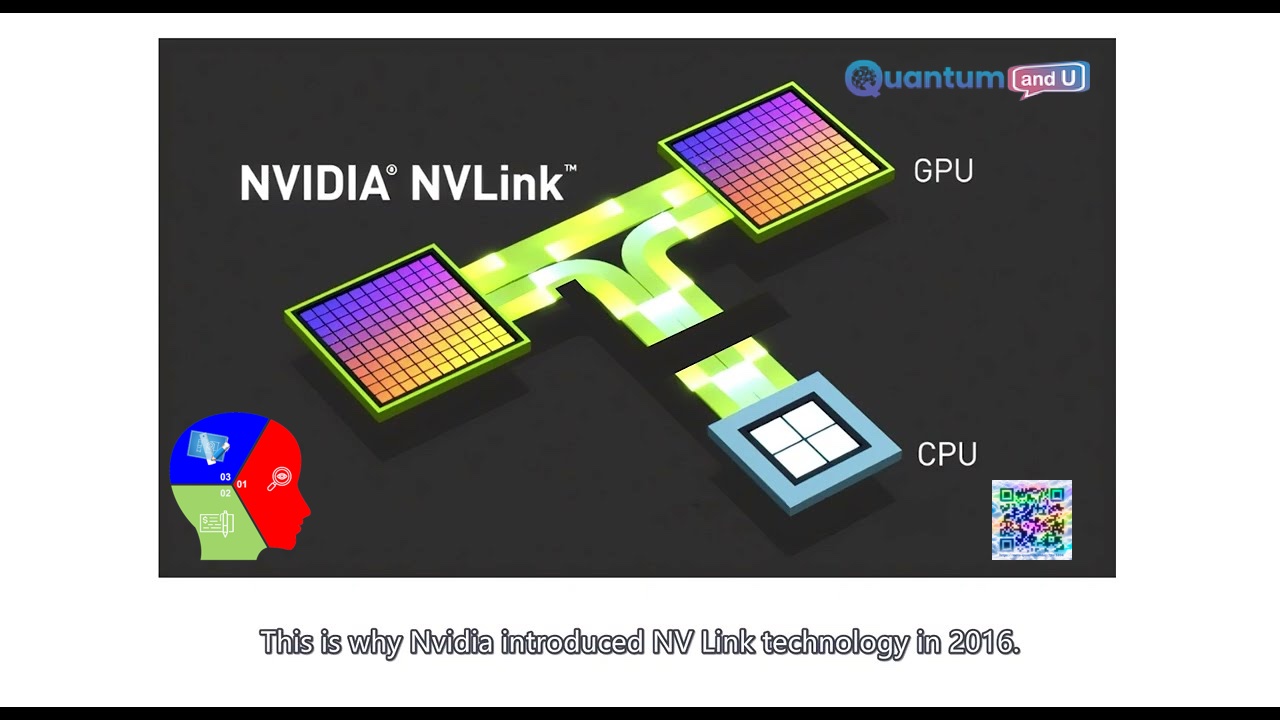

4. NVLink:

- Type: Proprietary high-speed interconnect technology developed by Nvidia.

- Focus: Designed specifically for high-bandwidth, low-latency connections between Nvidia GPUs and other components within a single system.

- Implementation: Requires Nvidia GPUs and compatible motherboards with NVLink connectors.

Benefits:

- Exceptionally high bandwidth and ultra-low latency, ideal for tightly coupled workloads in HPC and AI.

- Tight integration with Nvidia GPUs and software for optimized performance.

- Can be used with NVSwitch for high-speed fabric switching in multi-GPU setups.

Drawbacks:

- Proprietary technology, limited to Nvidia systems, not compatible with other brands.

Choosing the Right Option:

The best choice depends on your specific needs and priorities:

- For the highest performance and lowest latency within an Nvidia system: NVLink is the clear winner.

- For high-performance data center deployments with diverse hardware: InfiniBand offers the best combination of low latency and scalability.

- For a balance between cost and performance using existing Ethernet infrastructure: RoCE is a good option, but ensure compatible hardware support.

- Future-proofing and open standards: Keep an eye on UALink (Ultra Accelerator Link) development, aiming to be an open-standard alternative for high-performance GPU communication.
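
The guidance above can be sketched as a small decision helper. This is only an illustration of the rules of thumb in this section, not a sizing tool: the function name and its three boolean parameters are hypothetical, and real deployments weigh many more factors (budget, scale, existing switches, vendor support).

```python
def suggest_interconnect(nvidia_only: bool,
                         within_single_system: bool,
                         reuse_ethernet: bool) -> str:
    """Map the article's rules of thumb to a suggested interconnect.

    All three parameters are hypothetical simplifications:
      nvidia_only          - every accelerator is an Nvidia GPU
      within_single_system - the GPUs to connect sit in one server
      reuse_ethernet       - existing Ethernet infrastructure should be kept
    """
    # Highest performance and lowest latency inside an Nvidia system.
    if nvidia_only and within_single_system:
        return "NVLink"
    # Balance of cost and performance on existing Ethernet
    # (requires RoCE-enabled NICs).
    if reuse_ethernet:
        return "RoCE"
    # High-performance deployments with diverse hardware.
    return "InfiniBand"


if __name__ == "__main__":
    print(suggest_interconnect(True, True, False))
    print(suggest_interconnect(False, False, True))
    print(suggest_interconnect(False, False, False))
```

In practice these options are combined rather than mutually exclusive: NVLink typically connects GPUs inside a server, while InfiniBand or RoCE connects the servers to each other.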

Want to know more about NVLink and UALink? See the special note at the end of this article.

Video about InfiniBand, RoCE and NVLink:

Related Sections of Above Video:

- PCIe Limitations and NVLink Technology

- The video starts by explaining the limitations of PCIe interfaces in AI data centers. Although a PCIe Gen 5 x16 link offers a maximum of 128 GB/s of bidirectional bandwidth, that pales next to the memory bandwidth of AI accelerator cards, which can reach up to 5 TB/s.

- NVLink technology, introduced by NVIDIA in 2016, addresses these limitations by providing higher transfer speeds and lower latency. NVLink offers up to 600 GB/s for A100 and 900 GB/s for H100, significantly surpassing PCIe bandwidth.

- NVLink’s direct point-to-point connections enable efficient data transfer without involving host memory or CPUs, crucial for AI applications. This technology also supports memory sharing among GPUs, further enhancing data processing efficiency.

- Interconnect Design and RDMA Technology

- The video discusses the importance of efficient interconnect design among clusters in distributed training. Traditional TCP/IP communication introduces high latency due to multiple data copies and kernel involvement.

- Remote Direct Memory Access (RDMA) technology offers a solution by allowing direct data transfer between nodes without kernel involvement, significantly reducing CPU load and enhancing data transfer rates.

- RDMA supports operations like send/receive, read/write, and direct memory transfer between virtual memories of applications on different nodes, leading to substantial efficiency improvements in AI data centers.

- RDMA Implementations: InfiniBand, RoCE, and iWARP

- Three main RDMA implementations are covered: InfiniBand (IB), RoCE (RDMA over Converged Ethernet), and iWARP.

- InfiniBand excels in high-performance computing with excellent performance, low latency, and scalability but requires significant infrastructure changes and investment.

- RoCE offers a more cost-effective alternative by enabling RDMA over existing Ethernet infrastructure, reducing the need for new equipment and facilitating easier integration.

- Emerging efforts such as the specifications from the Ultra Ethernet Consortium (UEC) aim to provide even better performance and efficiency for AI workloads, reflecting continuous technological development and innovation.
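
To put the PCIe and NVLink figures quoted above side by side, here is a minimal sketch that computes the ideal time to move a payload over each link. It assumes the peak aggregate numbers from this article (128, 600, and 900 GB/s, and 5 TB/s for accelerator memory) and ignores latency and protocol overhead, so real transfers will be slower. The 10 GB payload and all names in the code are illustrative assumptions.

```python
# Peak aggregate bandwidth figures quoted in this article, in GB/s.
LINK_BANDWIDTH_GB_PER_S = {
    "PCIe Gen5 x16": 128,
    "NVLink (A100)": 600,
    "NVLink (H100)": 900,
}
# "Up to 5 TB/s" accelerator memory bandwidth, in GB/s.
MEMORY_BANDWIDTH_GB_PER_S = 5000


def transfer_time_ms(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal transfer time in milliseconds (no latency, no overhead)."""
    return payload_gb / bandwidth_gb_per_s * 1000.0


def summarize(payload_gb: float) -> dict:
    """Per-link ideal time, speedup over PCIe, and share of memory bandwidth."""
    pcie = LINK_BANDWIDTH_GB_PER_S["PCIe Gen5 x16"]
    rows = {}
    for name, bandwidth in LINK_BANDWIDTH_GB_PER_S.items():
        rows[name] = {
            "time_ms": transfer_time_ms(payload_gb, bandwidth),
            "speedup_vs_pcie": bandwidth / pcie,
            "fraction_of_memory_bw": bandwidth / MEMORY_BANDWIDTH_GB_PER_S,
        }
    return rows


if __name__ == "__main__":
    # Hypothetical 10 GB payload, e.g. a chunk of model weights or gradients.
    for name, row in summarize(payload_gb=10.0).items():
        print(f"{name:15s} {row['time_ms']:7.2f} ms  "
              f"{row['speedup_vs_pcie']:.2f}x PCIe  "
              f"{row['fraction_of_memory_bw']:.1%} of memory bandwidth")
```

Even the fastest link in this comparison reaches well under a fifth of the quoted memory bandwidth, which is why interconnects remain a bottleneck worth designing around.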

The Rise of Data Centers in Southeast Asia: Impact of High-Performance Interconnects

The booming digital economy in Southeast Asia is driving a surge in demand for data centers. This creates significant opportunities, but also presents challenges when it comes to high-performance computing (HPC) and Artificial Intelligence (AI) applications. Here’s how InfiniBand, RoCE, iWARP, and NVLink will impact Southeast Asia’s data center landscape:

Opportunities:

- Increased Demand for High-Performance Interconnects: As Southeast Asia embraces HPC and AI, the need for faster communication between GPUs will rise. This creates a market for InfiniBand, RoCE, and potentially UALink in the future.

- Data Center Specialization: Data centers can specialize in catering to specific needs. Facilities with high-performance interconnects can attract clients requiring HPC and AI capabilities.

- Infrastructure Upgrade Cycle: The adoption of these technologies might trigger an upgrade cycle for data center infrastructure, leading to new investments.

- Enhanced Regional Competitiveness: Robust data center infrastructure with high-performance interconnects can make Southeast Asia a more attractive destination for international cloud service providers and tech companies.

Challenges:

- Cost Considerations: InfiniBand offers the best performance but comes at a premium. RoCE offers a more cost-effective option but may not meet the demands of all HPC applications.

- Technical Expertise: Implementing and managing high-performance interconnects requires skilled personnel. A talent gap in this area could hinder adoption.

- Standardization: With UALink still under development, the current landscape lacks a single open standard. This might create compatibility issues for some data centers.

Overall, the rise of high-performance interconnects presents a mixed bag for Southeast Asia’s data centers.

How data centers can capitalize on the opportunities:

- Investing in the Right Technology: Operators should carefully evaluate the needs of their target clientele and choose the most suitable interconnect technology (InfiniBand or RoCE today, or UALink once it becomes available).

- Developing Expertise: Investing in training programs to equip staff with the necessary skills to manage and maintain these complex technologies.

- Collaboration: Data center operators can collaborate with universities and tech companies to foster a skilled workforce in this domain.

Conclusion:

This video thoroughly explores the complexities of AI data center network design, highlighting the limitations of traditional PCIe interfaces and the advancements brought by NVLink and RDMA technologies.

Each technology offers unique advantages and drawbacks. Understanding their strengths and weaknesses will help you choose the most suitable option for connecting your GPUs and achieving optimal performance for your specific workloads.

By embracing these technologies and overcoming the challenges, Southeast Asia’s data centers can position themselves as key players in the global digital landscape.

Key takeaways:

- NVLink significantly enhances data transfer speeds and efficiency compared to PCIe.

- RDMA technology reduces latency and CPU load, crucial for distributed AI training.

- InfiniBand offers high performance but at a higher cost, while RoCE provides a cost-effective alternative leveraging existing Ethernet infrastructure.

- Emerging efforts such as the Ultra Ethernet Consortium (UEC) point to the future of AI data center networking, promising further advancements.

Special Note on GPU Links

About NVLink and UALink:

NVLink and UALink are both technologies designed to connect multiple GPUs (Graphics Processing Units) in a computer system, but they differ in their approach and target audience.

NVLink:

- Developed by: Nvidia

- Type: Proprietary high-speed interconnect

- Current Status: Established technology, available since 2016

- Focus: High-performance connections within a single system, ideal for tightly coupled workloads in HPC (High-Performance Computing) and AI (Artificial Intelligence)

- Benefits:

- Blazing-fast data transfer for scaling GPU clusters

- Ultra-low latency for real-time data sharing between GPUs

- Integration with NVSwitch for high-speed switching in multi-GPU setups

- Drawbacks:

- Limited to Nvidia systems, not compatible with other brands

UALink (Ultra Accelerator Link):

- Developed by: Consortium including AMD, Intel, Broadcom, and others (excluding Nvidia)

- Type: Open-standard interconnect (targeted)

- Current Status: Under development, first version expected around 2026

- Focus: Open standard for high-performance GPU communication in data centers, targeting connections between large numbers of GPUs

- Benefits:

- Open standard allows wider adoption and potentially lower costs

- Aims for high scalability for large-scale deployments

- Drawbacks:

- New technology, performance and features compared to NVLink yet to be proven

- Adoption may take longer because it is a new standard

Here’s a table summarizing the key points:

| Feature | NVLink | UALink |
|---|---|---|
| Developer | Nvidia | Consortium (AMD, Intel, Broadcom, etc.) |
| Type | Proprietary high-speed interconnect | Open-standard interconnect (targeted) |
| Status | Established technology | Under development (expected 2026) |
| Focus | Tightly coupled workloads (HPC, AI) | Large-scale GPU communication (data centers) |
| Benefits | High bandwidth, low latency, NVSwitch | Open standard, high scalability |
| Drawbacks | Limited to Nvidia systems | New technology, unproven performance |

Choosing Between Them:

- If you need the highest performance and lowest latency for tasks like scientific computing or AI within an Nvidia system, NVLink is the better option today (2024).

- If open standards, future scalability for large deployments, and potentially wider compatibility across vendors are important, UALink might be a good choice once it’s available.

The development of UALink could bring more competition and innovation to the market, potentially benefiting users with a wider range of choices and potentially lower costs in the long run.