Introduction:

In this YouTube review, we delve into recent advances in text-to-video and long-context AI, focusing on two announcements from February 2024: OpenAI’s SORA, which generates videos from text prompts, and Google’s Gemini 1.5 Pro, which can process up to 1 million tokens of text, audio, and video input. However, the review traces the core of these innovations back to a seminal event on February 13 that set the stage for them.

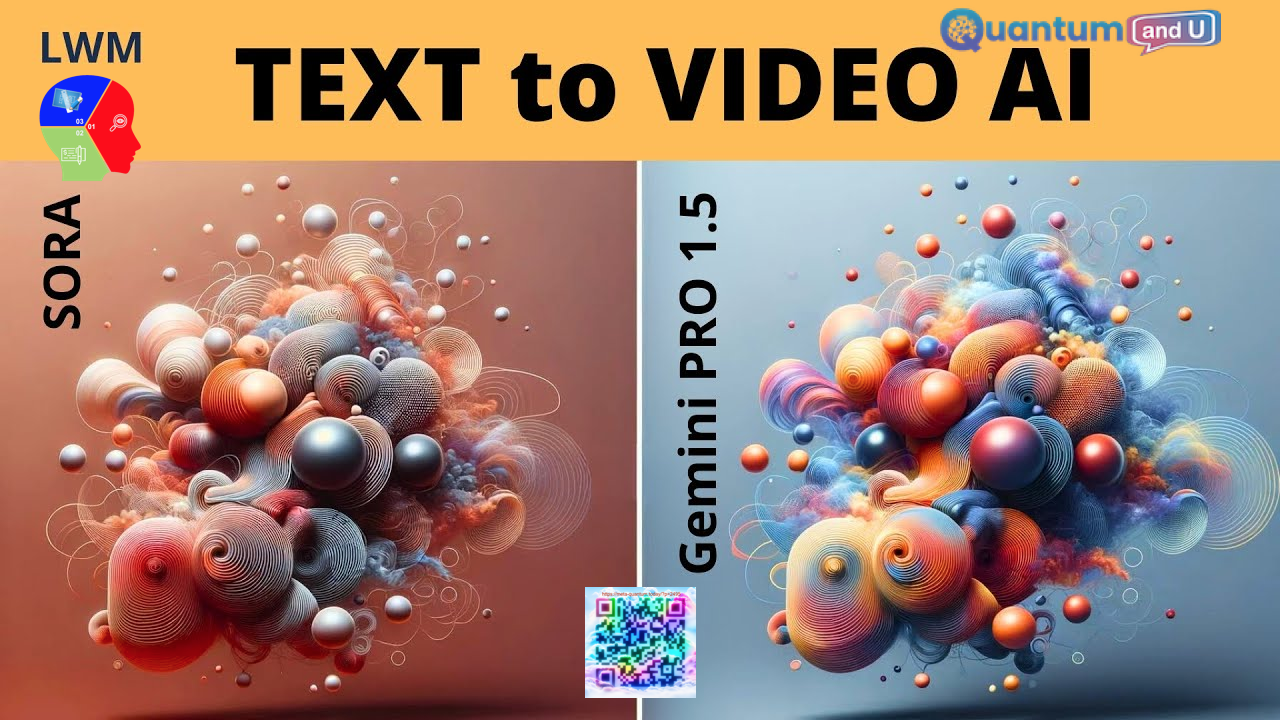

Text-to-VIDEO 1Mio Token: SORA vs Gemini PRO 1.5

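SORA and Gemini 1.5 Pro were announced at around the same time, but they are different kinds of models: SORA generates video from text prompts, while Gemini 1.5 Pro is a multimodal large language model (LLM) that accepts video as input and responds with text. Key differences: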

SORA:

- Closed-source: OpenAI has not released SORA’s code or model weights; at its announcement, access was limited to red teamers and a small group of visual artists, designers, and filmmakers.

- Focus on text-to-video: SORA generates realistic and imaginative video clips up to a minute long from text descriptions.

- 1Mio Token: The "1Mio Token" in the video title refers to a context window of 1 million tokens, a headline feature of Gemini 1.5 Pro, rather than to SORA’s size. OpenAI has not published SORA’s parameter count.

- Architecture: OpenAI describes SORA as a diffusion transformer that operates on spacetime patches of compressed video, so it is not a large language model.

Gemini 1.5 Pro:

- Closed-source: Google has not released the code or weights of Gemini 1.5 Pro.

- Focus on multimodal understanding: Gemini 1.5 Pro accepts text, images, audio, and video as input and responds with text; it analyzes video rather than generating it.

- Long context: Gemini 1.5 Pro can process up to 1 million tokens in a single prompt, which Google equates to about an hour of video. Google has not published its parameter count, so a size comparison with SORA is not possible.

- Video understanding: Gemini 1.5 Pro can answer questions about long videos, for example by locating a specific scene or summarizing events, but it does not edit or generate video.

- Limited access: At announcement, the 1-million-token context window was available only in a private preview for a limited group of developers and enterprise customers, with a waitlist for others.

Choosing between the two:

Because the two models do different jobs, the choice depends on the task:

- For generating video clips from text prompts: SORA is the relevant model, though access is restricted.

- For analyzing long videos, audio, or large documents: Gemini 1.5 Pro is the better fit.


Key sections of the video:

- Innovative Research by UC Berkeley:

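The review begins with the foundational research from UC Berkeley that introduced the Blockwise Parallel Transformer (BPT) architecture. Standard self-attention compares every token with every other token, so its cost grows quadratically with sequence length and memory quickly becomes the bottleneck for long inputs. BPT reduces this memory cost by computing attention one block of the sequence at a time and fusing the feed-forward network computation into the same blockwise loop.

- Introduction of Ring Attention: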

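UC Berkeley then extended this framework with Ring Attention, which spreads the blockwise computation across multiple hosts arranged in a ring. Each host keeps one block of queries and passes its key and value blocks to the next host while it computes attention, so communication overlaps with computation. Because no single device has to hold the whole sequence, memory constraints are relaxed and much longer input sequences can be processed.

- Achievements and Impact: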

Through extensive experiments, UC Berkeley showed that this approach can train on sequences up to device-count times longer than those handled by previous memory-efficient Transformers, and that it scales to sequences of up to 100 million tokens without approximating attention.

- Open-Source Initiative:

UC Berkeley’s contributions extend beyond research: they provide an open-source implementation that includes pre-trained and fine-tuned models. This gives users access to a family of 7-billion-parameter models capable of handling multimodal sequences.

- Text-to-Video Generation:

A significant highlight is UC Berkeley’s text-to-video generation, in which a text prompt produces a short video. The review notes that this work predates the subsequent announcements from major tech companies such as OpenAI and Google.

- Comparison with Gemini 1.5 Pro:

The review briefly discusses Gemini 1.5 Pro, highlighting the substantial compute behind it and its ability to process audio alongside text, images, and video. However, it emphasizes UC Berkeley’s pioneering role in text-to-video generation and multimodal processing.
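The blockwise computation behind the Blockwise Parallel Transformer, and its ring-distributed extension, can be illustrated with a short NumPy sketch. This is a minimal illustration under stated assumptions, not UC Berkeley’s implementation: it uses a single attention head with no causal mask, omits residual connections and normalization, simulates the ring of hosts with a Python loop, and the function names and the toy feed-forward network are hypothetical. The core trick is an online softmax: each block of queries keeps a running maximum, numerator, and denominator, so key/value blocks can be folded in one at a time, whether they come from a loop on one device or arrive from a neighboring host.

```python
import numpy as np


def naive_attention(q, k, v):
    """Reference softmax attention that materializes the full n x n score matrix."""
    s = q @ k.T / np.sqrt(q.shape[-1])
    p = np.exp(s - s.max(axis=-1, keepdims=True))
    return (p / p.sum(axis=-1, keepdims=True)) @ v


def empty_state(rows, d_v):
    """Running (numerator, row max, denominator) for a block of query rows."""
    return np.zeros((rows, d_v)), np.full(rows, -np.inf), np.zeros(rows)


def accumulate_block(q_blk, k_blk, v_blk, acc, row_max, row_sum):
    """Fold one key/value block into the running softmax statistics (online softmax)."""
    s = q_blk @ k_blk.T / np.sqrt(q_blk.shape[-1])
    new_max = np.maximum(row_max, s.max(axis=-1))
    scale = np.exp(row_max - new_max)  # rescales everything accumulated so far
    p = np.exp(s - new_max[:, None])
    acc = acc * scale[:, None] + p @ v_blk
    row_sum = row_sum * scale + p.sum(axis=-1)
    return acc, new_max, row_sum


def blockwise_attention(q, k, v, block=4, ffn=None):
    """One device: process one query block at a time and stream over key/value
    blocks, so only block x block scores exist at once. If ffn is given, it is
    applied to each output block immediately (blockwise feed-forward fusion)."""
    out = []
    for i in range(0, q.shape[0], block):
        q_blk = q[i:i + block]
        state = empty_state(q_blk.shape[0], v.shape[-1])
        for j in range(0, k.shape[0], block):
            state = accumulate_block(q_blk, k[j:j + block], v[j:j + block], *state)
        acc, _, row_sum = state
        attn_blk = acc / row_sum[:, None]
        out.append(ffn(attn_blk) if ffn is not None else attn_blk)
    return np.concatenate(out)


def ring_attention(q, k, v, num_hosts=4):
    """Simulated ring of hosts (num_hosts must not exceed the sequence length).
    Host h keeps query block h; key/value blocks move one host along the ring
    after every step, so each host sees every key/value block exactly once."""
    q_blocks = np.array_split(q, num_hosts)
    kv_blocks = list(zip(np.array_split(k, num_hosts), np.array_split(v, num_hosts)))
    states = [empty_state(qb.shape[0], v.shape[-1]) for qb in q_blocks]
    for _ in range(num_hosts):
        # On real hardware these updates run in parallel, overlapped with the transfer.
        states = [accumulate_block(q_blocks[h], *kv_blocks[h], *states[h])
                  for h in range(num_hosts)]
        kv_blocks = kv_blocks[-1:] + kv_blocks[:-1]  # host h receives host h-1's block
    return np.concatenate([acc / row_sum[:, None] for acc, _, row_sum in states])


# Usage: compare both sketches with the naive reference on random data.
rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((10, 8)) for _ in range(3))
w1, w2 = rng.standard_normal((8, 16)), rng.standard_normal((16, 8))


def toy_ffn(h):
    """Hypothetical two-layer ReLU feed-forward network."""
    return np.maximum(h @ w1, 0) @ w2


ref = naive_attention(q, k, v)
print(np.allclose(blockwise_attention(q, k, v, block=3), ref))  # True
print(np.allclose(blockwise_attention(q, k, v, block=3, ffn=toy_ffn), toy_ffn(ref)))  # True
print(np.allclose(ring_attention(q, k, v, num_hosts=3), ref))  # True
```

Both blockwise versions match the naive reference to floating-point precision while only ever forming block-sized score matrices. For scale, the naive version’s score matrix for a 1,000,000-token sequence would hold one trillion (10^12) entries, about 2 TB per attention head at 2 bytes per entry.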

Impact of Text-to-Video on Southeast Asia (SEA) and Market Opportunities:

The emergence of text-to-video technology has the potential to significantly impact Southeast Asia and present several market opportunities. Here’s a breakdown of both:

Impact:

a. Educational Content Creation:

- Language Diversity: Text-to-video can overcome language barriers by generating videos in various Southeast Asian languages, making educational content more accessible. This can be especially beneficial in regions with diverse linguistic communities.

- Personalized Learning: AI-powered video generation can personalize learning experiences by tailoring content to individual needs and learning styles. This can be particularly impactful in areas with limited access to qualified teachers.

b. Marketing and Advertising:

- Culturally Relevant Content: Text-to-video allows brands to create culturally relevant marketing materials for specific Southeast Asian markets, considering local customs and preferences.

- Increased Engagement: Engaging video content can attract more customers and increase brand awareness, especially among younger generations accustomed to consuming visual media.

c. Media and Entertainment:

- Content Creation Democratization: Text-to-video tools empower individuals to create animated content without needing animation expertise, fostering local content creation and expression.

- Preserving Languages and Cultures: The technology can be used to document and preserve endangered languages and cultural traditions through engaging video narratives.

d. Business Communication:

- Enhanced Communication: Text-to-video can be used to create explainer videos, product demonstrations, and training materials, improving communication within and across businesses.

- Cost-Effective Content Production: Generating videos with AI can be more cost-effective than traditional methods, especially for small businesses with limited budgets.

Market Opportunities:

- Development and Localization of Text-to-Video Tools: There’s a growing market for text-to-video tools tailored to Southeast Asian languages and cultural contexts.

- Training and Support Services: Businesses and individuals need training and support to effectively use and leverage these new tools.

- Content Creation Services: Companies can offer text-to-video content creation services to businesses and individuals who lack the technical expertise.

- Educational Content Development: There’s a demand for culturally relevant and engaging educational content created using text-to-video technology.

- Marketing and Advertising Services: Agencies can offer text-to-video-based marketing and advertising solutions for businesses targeting Southeast Asian markets.

Challenges and Considerations:

- Ethical Concerns: Deepfakes and potential misuse of the technology raise ethical concerns that need to be addressed.

- Digital Divide: Unequal access to technology and the internet could exacerbate existing inequalities.

- Data Privacy: Data security and privacy issues need to be carefully considered when using and developing these tools.

Overall, text-to-video technology has the potential to significantly impact Southeast Asia by fostering education, communication, and creativity. However, ethical considerations and addressing the digital divide are crucial to ensure responsible and equitable development and use of this technology.

Conclusion:

This review concludes by commending UC Berkeley’s groundbreaking research and open-source initiatives. It highlights the transformative potential of text-to-video technology and encourages viewers to explore UC Berkeley’s models themselves. While commercial offerings like OpenAI’s SORA and Google’s Gemini 1.5 Pro attract attention, the review emphasizes the accessibility and innovation of UC Berkeley’s contributions to the field.


Takeaway Key Points:

- UC Berkeley’s research on Blockwise Parallel Transformer and Ring Attention forms the foundation for recent advancements in text-to-video technology.

- Their open-source initiative provides access to powerful, trainable models capable of processing multimodal sequences.

- UC Berkeley’s text-to-video generation predates commercial offerings and showcases the potential of open research initiatives.

- While commercial solutions like Gemini 1.5 Pro offer impressive capabilities, UC Berkeley’s contributions remain significant in the field of natural language processing and multimodal AI.

Related References: