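An animated breakdown of the core of large AI models: understand the Transformer in 10 minutes! If you use poison to poison a poisonous snake, will the snake be poisoned to death? (用毒毒毒蛇,毒蛇会不会被毒毒死?)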

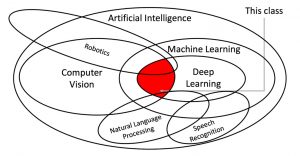

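Animated visualization makes complex concepts simple: the Transformer involves multi-dimensional matrix operations, attention-weight computation, and other abstract ideas that are very challenging for beginners. Animation turns these invisible mathematical processes into an intuitive visual flow.

Animation can show the path data takes between the encoder and the decoder, how the attention mechanism "looks at" different words, and how word embeddings move through a high-dimensional space. This kind of dynamic visualization lowers the barrier to entry considerably, so learners without a technical background can also grasp the core principles behind large AI models.

Compared with static charts and dry formulas, animation offers a more vivid learning experience and helps viewers build an intuitive understanding instead of memorizing concepts by rote.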
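For readers who want to see the "attention weight" idea in numbers as well as pictures, here is a minimal sketch of scaled dot-product attention for a single query. It is an illustration under stated assumptions, not the video's own code: the three tokens and their 2-dimensional embeddings are made up by hand, and the embeddings are used directly as queries, keys, and values, whereas a real Transformer would first pass them through learned projection matrices.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Hypothetical tokens and hand-picked embeddings, for illustration only.
tokens = ["poison", "snake", "die"]
embeddings = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]

# How much does "snake" attend to each token, itself included?
weights, output = attention(embeddings[1], embeddings, embeddings)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

The printed weights are what an attention animation typically draws as lines of varying thickness between words: the larger the weight, the more that word contributes to the output vector for "snake".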